
I have a HotSpot JVM heap dump that I would like to analyze. The VM ran with -Xmx31g, and the heap dump file is 48 GB large.

- I won't even try jhat, as it requires about five times the heap memory (that would be 240 GB in my case) and is awfully slow.
- Eclipse MAT crashes with an ArrayIndexOutOfBoundsException after analyzing the heap dump for several hours.

What other tools are available for that task? A suite of command line tools would be best, consisting of one program that transforms the heap dump into efficient data structures for analysis, combined with several other tools that work on the pre-structured data.

Robin Green

Roland Illig

9 Answers

Normally, I use ParseHeapDump.sh, which is included with Eclipse Memory Analyzer and described here, and I run it on one of our more beefed-up servers (download and copy over the Linux .zip distro, then unzip it there). The shell script needs fewer resources than parsing the heap from the GUI, plus you can run it on your beefy server with more resources (you can allocate more by adding something like -vmargs -Xmx40g -XX:-UseGCOverheadLimit to the end of the last line of the script). For instance, the last line of that file might look like this after modification:
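The modified line itself is not reproduced in this copy of the answer. As a minimal sketch of the edit described above, the function below appends the options to the last line of the script in place. It assumes the last line of your ParseHeapDump.sh is the one that launches MAT (in the distributions I have seen it starts MemoryAnalyzer with `-application org.eclipse.mat.api.parse "$@"`, but check your own copy). The function name `append_vmargs` is made up for illustration.

```shell
# Append extra JVM options to the last line of ParseHeapDump.sh.
# Assumption: the last line of the script is the one that launches MAT
# (no trailing blank line); check your copy before running this.
append_vmargs() {
  script="$1"
  # "$" addresses the last line; "s/$/.../" appends text to its end.
  # -i.bak edits in place and keeps the original as <script>.bak.
  sed -i.bak '$ s/$/ -vmargs -Xmx40g -XX:-UseGCOverheadLimit/' "$script"
}
```

Usage would be something like `append_vmargs ./path/to/ParseHeapDump.sh`. Pick an -Xmx value that fits the server, and restore the .bak copy if the result is not what you expected.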

Run it like ./path/to/ParseHeapDump.sh ../today_heap_dump/jvm.hprof

After that succeeds, it creates a number of 'index' files next to the .hprof file.

After creating the indices, I generate reports from them, scp those reports to my local machine (just the reports, not the indices), and try to find the culprit from the reports alone. Here's a tutorial on creating the reports.

Example report:

Other report options:

org.eclipse.mat.api:overview and org.eclipse.mat.api:top_components
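The example command is missing from this copy of the answer. As a rough sketch, ParseHeapDump.sh accepts a report ID after the heap dump path, so the reports can be generated in a loop. The report ID `org.eclipse.mat.api:suspects` (Leak Suspects) is not named in the text above and is added here as an assumption about what the example showed. The variable names and default paths are placeholders.

```shell
# Hypothetical locations; adjust to your setup.
MAT_DIR="${MAT_DIR:-./path/to}"
HEAP_DUMP="${HEAP_DUMP:-../today_heap_dump/jvm.hprof}"

# Run three report types one after another.
# Stops at the first report that fails.
generate_reports() {
  for report in org.eclipse.mat.api:suspects \
                org.eclipse.mat.api:overview \
                org.eclipse.mat.api:top_components; do
    "$MAT_DIR/ParseHeapDump.sh" "$HEAP_DUMP" "$report" || return 1
  done
}
```

Calling `generate_reports` after the initial parse should reuse the existing index files, so the later runs are typically much cheaper than the first one.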

If those reports are not enough and I need to dig further (say, via OQL), I scp the indices as well as the hprof file to my local machine, and then open the heap dump (with the indices in the same directory as the heap dump) in my Eclipse MAT GUI. From there, it does not need much memory to run.

EDIT: I'd just like to add two notes:

- As far as I know, only the generation of the indices is the memory-intensive part of Eclipse MAT. Once you have the indices, most of your processing in Eclipse MAT does not need that much memory.

- Doing this with a shell script means I can do it on a headless server (and I normally do, because those are usually the most powerful machines). And if you have a server that can generate a heap dump of that size, chances are you have another server out there that can process a heap dump of that size as well.

The accepted answer to this related question should provide a good start for you (uses live jmap histograms instead of heap dumps):
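The linked answer is not reproduced here. As a rough sketch of the jmap histogram approach it refers to, assuming the JDK's jmap tool is on the PATH and you know the process ID of the running JVM, something like the following prints the top of a live-object class histogram. The function name `live_histogram` is made up for illustration.

```shell
# Show the first lines of a live-object class histogram for a running JVM.
# pid is the process ID of the target JVM (e.g. as listed by jps).
live_histogram() {
  pid="$1"
  lines="${2:-30}"
  # -histo:live counts only reachable objects, which triggers a full GC first.
  # The output is sorted by bytes per class, largest first.
  jmap -histo:live "$pid" | head -n "$lines"
}
```

For example, `live_histogram 12345 20` would show the first 20 lines for a JVM with the (hypothetical) PID 12345. Since the `live` option forces a full GC, expect a pause on a heap of this size.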

Most other heap analysers (I use IBM HeapAnalyzer, http://www.alphaworks.ibm.com/tech/heapanalyzer) require at least somewhat more RAM than the size of the heap if you're expecting a nice GUI tool.

Other than that, many developers use alternative approaches, like live stack analysis to get an idea of what's going on.

That said, I must question why your heaps are so large. The effect on allocation and garbage collection must be massive. I'd bet a large percentage of what's in your heap should actually be stored in a database, a persistent cache, etc.

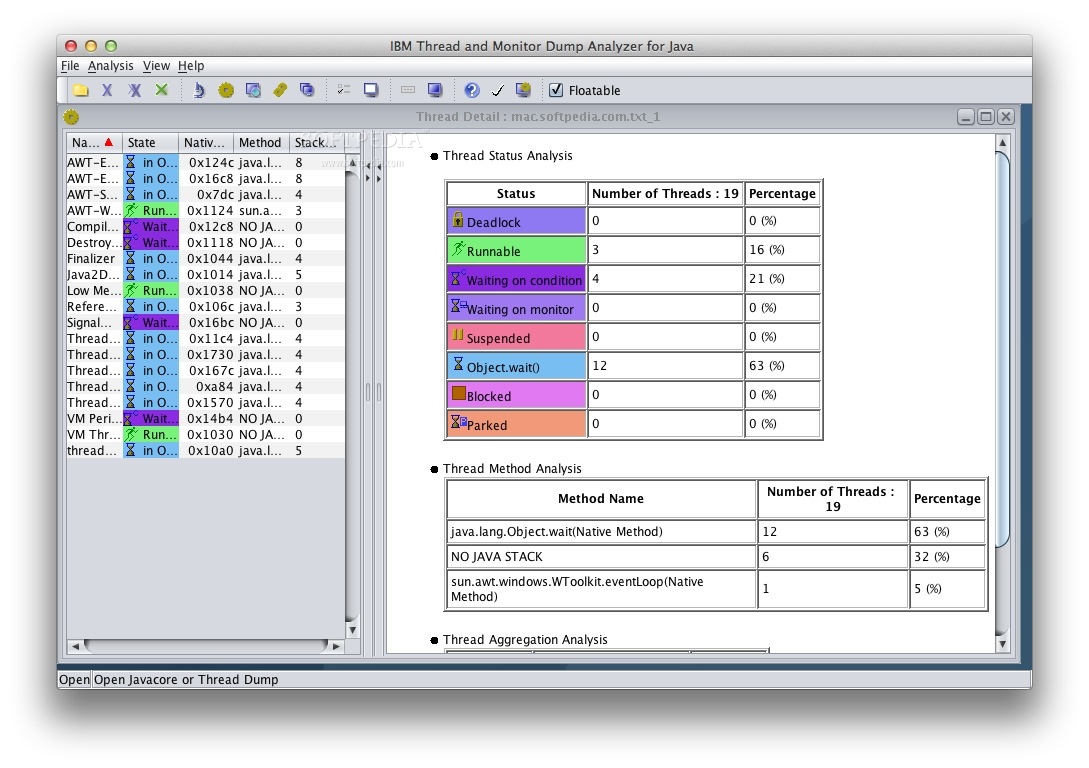

Online Java Thread Dump Analyzer

A few more options:

This person (http://blog.ragozin.info/2015/02/programatic-heapdump-analysis.html) wrote a custom NetBeans-based heap analyzer that exposes a 'query style' interface over the heap dump file, instead of actually loading the file into memory.

Though I don't know if 'his query language' is better than the Eclipse OQL mentioned in the accepted answer here.

JProfiler 8.1 ($499 for a user license) is also said to be able to traverse large heaps without using a lot of memory.

rogerdpack

I suggest trying YourKit. It usually needs a little less memory than the heap dump size (it indexes the dump and uses that information to retrieve what you want).


Peter Lawrey

First step: increase the amount of RAM you are allocating to MAT. By default it's not very much, and it can't open large files.

If you use MAT on a Mac (OS X), you'll find the file MemoryAnalyzer.ini in MemoryAnalyzer.app/Contents/MacOS. Editing that file had no effect for me; the changes didn't 'take'. You can instead create a modified startup command or shell script based on the content of this file and run it from that directory. In my case I wanted a 20 GB heap:

Just run this command/script from Contents/MacOS directory via terminal, to start the GUI with more RAM available.
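The original startup command is not reproduced in this copy. As a minimal sketch, assuming the Eclipse launcher's standard `-vmargs` option is enough on its own, the function below starts the GUI with a larger heap. The original command was based on the full contents of MemoryAnalyzer.ini and probably carried more options than this. The function name `launch_mat` and its arguments are made up for illustration.

```shell
# Start the MAT GUI with a larger heap by passing JVM options on the command line.
# mat_dir is the Contents/MacOS directory inside MemoryAnalyzer.app.
launch_mat() {
  mat_dir="$1"
  heap="${2:-20g}"
  # Run in a subshell so the caller's working directory is unchanged.
  # Everything after -vmargs is handed to the JVM by the Eclipse launcher.
  ( cd "$mat_dir" && ./MemoryAnalyzer -vmargs "-Xmx$heap" )
}
```

For example, `launch_mat /Applications/MemoryAnalyzer.app/Contents/MacOS 20g`, where the install path is a guess at a typical location. If other settings from the .ini file matter in your setup, copy them into the command as well.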

rogerdpack

A not so well known tool, http://dr-brenschede.de/bheapsampler/, works well for large heaps. It works by sampling, so it doesn't have to read the entire dump, though it is a bit finicky.

rogerdpack

This is not a command line solution, however I like the tools:

Copy the heap dump to a server large enough to host it. It is quite possible that the original server can be used.

Enter the server via ssh -X to run the graphical tool remotely and use jvisualvm from the Java binary directory to load the .hprof file of the heap dump.

The tool does not load the complete heap dump into memory at once, but loads parts as they are required. Of course, if you look around enough in the file, the required memory will eventually reach the size of the heap dump.
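The remote-GUI step above can be sketched as follows, assuming X11 forwarding is allowed on the server and jvisualvm is on the remote PATH. The `-J` prefix passes an option to VisualVM's own JVM, which is useful because memory use grows as you browse the dump. The function name `remote_visualvm`, the host and the heap size are placeholders.

```shell
# Start VisualVM on a remote host and display its GUI locally.
remote_visualvm() {
  host="$1"
  heap="${2:-8g}"
  # -X enables X11 forwarding; -J hands the following option to VisualVM's own JVM.
  ssh -X "$host" jvisualvm "-J-Xmx$heap"
}
```

For example, `remote_visualvm user@bigserver 16g`, then load the .hprof file from the File menu inside VisualVM.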

Try JProfiler; it works well for analyzing large .hprof files. I have tried it with a file of around 22 GB.

I came across an interesting tool called JXray. It offers a limited evaluation license. I found it very useful for finding memory leaks. You may want to give it a shot.